AI as a Gatekeeper of Knowledge: Benefits, Challenges and Examples from SLR

Artificial intelligence is changing how systematic literature reviews (SLRs) are conducted, substantially reducing the time they require while introducing new methodological considerations. This article critically examines AI’s role as both enabler and gatekeeper in the SLR process, analyzing its benefits for efficiency, its challenges to reliability, and the emerging applications that are reshaping academic research practices.

Martti Asikainen & Dr. Janne Kauttonen, 15.5.2025

The rapid development of artificial intelligence (AI) has opened up new possibilities in both academia and industry. Generative AI models, especially large language models (LLMs), have proven to be valuable tools for processing large volumes of information, such as scientific literature. In this article, we examine the systematic literature review (SLR), a widely used research method in its own right. Its objective is to identify and assess all relevant literature on a topic, phenomenon, or research question, so that conclusions can be drawn through an impartial, transparent, and replicable methodology (Kitchenham & Charters 2007).

AI Tools Supporting Systematic Literature Reviews

Systematic literature reviews have grown in popularity in recent years, especially in business and management research, although they remain most common in medicine and health technology (Klatt 2023). Conducting a literature review has traditionally been a labor-intensive manual process, so it is no surprise that AI has rushed in to assist. Finland’s health technology exports have grown nearly every year over the past two decades (Business Finland 2023), so methods that support this process are highly relevant for Finnish companies. AI can automate routine tasks and manage large datasets, freeing experts for more demanding work.

Assessing medicines and health technology requires processing growing volumes of data, systematic documentation, and an evidence-based approach. Clinical trials of medical devices and pharmaceuticals, as well as the regulatory requirements for medical devices, pose significant resource challenges for smaller companies. Systematic literature reviews are a crucial part of this process, but carrying them out manually is time-consuming and prone to human error. Unlike humans, AI does not suffer from fatigue, performance variation, a wandering mind, or change blindness, which makes it well suited to routine and tedious tasks.

A single literature review project can typically generate hundreds or even thousands of search results from research databases (Asikainen 2024). Recent studies and corporate cases suggest that AI can speed up especially the screening and data extraction phases, improving the identification and analysis of studies (e.g., Bolaños et al. 2024). AI also enables broader reviews in which language and the number of searches no longer limit the scope. The human workload is significantly reduced when AI handles the simpler search and screening tasks, allowing researchers to concentrate on the most complex cases.

AI applications can also help researchers identify key themes and gaps in large bodies of knowledge (Wagner et al. 2022; Asikainen 2024). Health technology has strong potential to lead the adoption of AI. Today, AI (in practice, large language models) has reached a level sufficient even for demanding information retrieval and screening tasks. Based on research trends and technological development, it is foreseeable, even without a crystal ball, that AI will soon transform how systematic literature reviews are conducted and how data are gathered. This, in turn, is expected to improve the accuracy of analysis and strengthen evidence-based decision-making, accelerating innovation in the field.

Accuracy and Efficiency Without Additional Costs

A wide range of AI-based tools is currently available to support different phases of systematic literature reviews, from locating studies to organizing information. Examples include deep research tools and search agents such as ChatGPT, Gemini, Perplexity, Meta AI, and Grok. Some tools, such as Consensus, focus on citation analysis and help users understand how research has been supported or contested in subsequent literature. Others, like NotebookLM, help manage large numbers of research papers and identify overlaps. Services such as Elicit and ResearchRabbit create visual citation maps and author networks, clarifying connections and structures (e.g., Chaturvedi 2025).

If off-the-shelf tools are too limited or costly, developing a custom application is a viable alternative. The major commercial AI models are available through APIs (e.g., the OpenAI API) and can therefore be integrated into proprietary solutions with relatively little effort. Open-source models of nearly comparable capability are also freely available and give the user full control. A good example is a Finnish AI Region (FAIR) client company that developed its own AI-based solution for automating systematic literature reviews. The application significantly improved the company’s ability to source literature for clinical assessments under the EU Medical Device Regulation (MDR) and In Vitro Diagnostic Regulation (IVDR). The application was validated using a dataset of over a thousand medical articles reviewed and categorized by clinical experts.
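To illustrate what integrating a model into a proprietary solution can look like, here is a minimal sketch of an LLM-assisted title and abstract screening loop in Python. It is not the FAIR client company’s solution, whose design is not described here. The `model` argument is a hypothetical stand-in for whichever API or locally run open-source model is used, and the prompt wording, the INCLUDE/EXCLUDE/UNSURE labels, and the example records are all illustrative assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable

# Hypothetical interface: any function that sends a prompt to a language model
# (a vendor API wrapper or a locally hosted open-source model) and returns its reply.
ModelFn = Callable[[str], str]


@dataclass
class Record:
    """One search result to be screened on title and abstract."""
    record_id: str
    title: str
    abstract: str


def build_prompt(record: Record, criteria: list[str]) -> str:
    """Combine the review's inclusion criteria with one record."""
    criteria_text = "\n".join(f"- {c}" for c in criteria)
    return (
        "You are screening studies for a systematic literature review.\n"
        f"Inclusion criteria:\n{criteria_text}\n\n"
        f"Title: {record.title}\n"
        f"Abstract: {record.abstract}\n\n"
        "Answer with exactly one word: INCLUDE, EXCLUDE or UNSURE."
    )


def parse_decision(reply: str) -> str:
    """Map the model's free-text reply to a label; anything unexpected becomes UNSURE."""
    words = reply.strip().upper().split()
    first = words[0].strip(".,:;!") if words else ""
    return first if first in {"INCLUDE", "EXCLUDE"} else "UNSURE"


def screen(records: list[Record], criteria: list[str], model: ModelFn) -> dict[str, str]:
    """Return record_id -> INCLUDE / EXCLUDE / UNSURE for every record."""
    return {r.record_id: parse_decision(model(build_prompt(r, criteria))) for r in records}


# Usage with a stand-in model so the example runs offline.
def fake_model(prompt: str) -> str:
    # "Includes" records whose abstract mentions a stent; replace with a real model call.
    abstract = prompt.split("Abstract:", 1)[1]
    return "INCLUDE" if "stent" in abstract.lower() else "Exclude."


criteria = ["Clinical study in humans", "Reports outcomes of the device under review"]
records = [
    Record("r1", "Two-year outcomes", "Follow-up of patients with a drug-eluting stent."),
    Record("r2", "Hip registry", "Revision rates of hip replacements in a national registry."),
]
print(screen(records, criteria, fake_model))  # {'r1': 'INCLUDE', 'r2': 'EXCLUDE'}
```

Routing every reply that cannot be parsed to UNSURE is a deliberate choice in this sketch: ambiguous cases stay with human reviewers instead of being silently excluded.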

With the application, the company improved the processing of its findings as well as the accuracy and reliability of its assessments. For product-specific searches, the application achieved a hit accuracy of up to 100%, and across the full dataset of more than a thousand articles it reached 93% accuracy. Productivity also increased, as the company could manage more projects and produce more extensive reviews without significant additional costs or staff.
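How the “hit accuracy” figures above were calculated is not specified. Assuming accuracy means the share of articles for which the tool’s include/exclude decision matches the clinical experts’ labels, a validation against an expert-labelled dataset could be sketched as follows. The function name and the five-article toy data are invented for illustration and are not the company’s results.

```python
from __future__ import annotations


def screening_metrics(tool: dict[str, bool], expert: dict[str, bool]) -> dict[str, float]:
    """Compare a tool's decisions with expert labels (True = include).

    Only articles that appear in both dictionaries are compared.
    """
    ids = tool.keys() & expert.keys()
    tp = sum(1 for i in ids if tool[i] and expert[i])          # both include
    tn = sum(1 for i in ids if not tool[i] and not expert[i])  # both exclude
    fp = sum(1 for i in ids if tool[i] and not expert[i])      # tool includes, expert excludes
    fn = sum(1 for i in ids if not tool[i] and expert[i])      # tool misses a relevant article
    n = tp + tn + fp + fn

    def ratio(num: int, den: int) -> float:
        return num / den if den else 0.0

    return {
        "accuracy": ratio(tp + tn, n),    # share of all decisions that match the experts
        "recall": ratio(tp, tp + fn),     # share of relevant articles the tool found
        "precision": ratio(tp, tp + fp),  # share of the tool's inclusions that are relevant
    }


# Invented toy labels for five articles, not data from the case described above.
expert = {"a": True, "b": True, "c": False, "d": False, "e": False}
tool = {"a": True, "b": False, "c": False, "d": True, "e": False}
print(screening_metrics(tool, expert))
# {'accuracy': 0.6, 'recall': 0.5, 'precision': 0.5}
```

Recall is worth reporting alongside accuracy. In screening, a missed relevant study (a false negative) is usually costlier than an extra article for a human to check, and when most search results are irrelevant, high accuracy can coexist with poor recall.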

The Importance of Reporting and Oversight

The adoption of AI applications must always take the associated risks into account. At worst, improper use can compromise the reliability and accuracy of a review and damage the reputation of the responsible researchers, research centers, universities, or companies. It is therefore vital to iteratively develop methods that detect errors and prevent them from appearing in published results. Typical errors include sources fabricated by the AI and the AI’s misinterpretations of real sources.

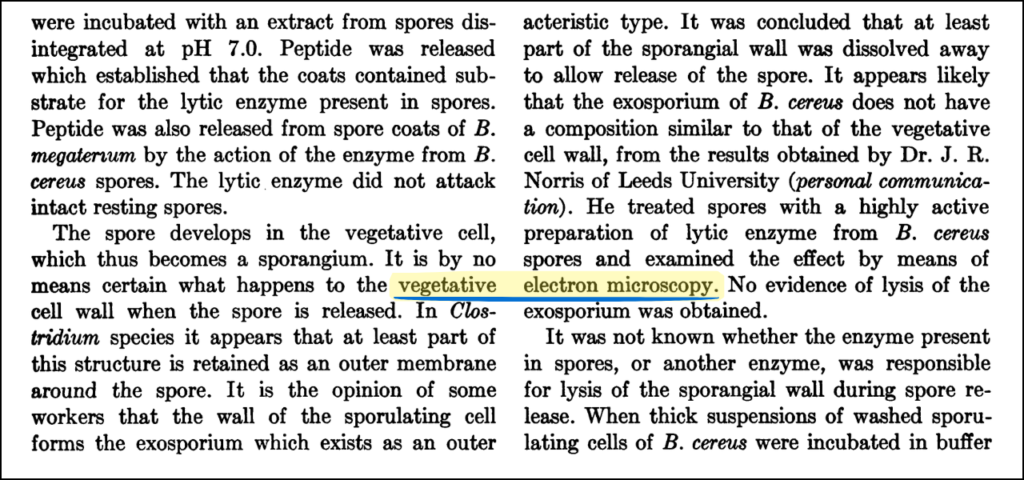

Modern AI systems, including language models, can produce plausible but incorrect information, which may end up in a review if it is not supervised (Fabiano et al. 2024). Once published, such misinformation can spread through the academic world. A well-known example is the “vegetative electron microscopy” case (Snoswell et al. 2025), in which a meaningless phrase has appeared in over 20 academic publications since 2017. It originated from a digitization error in a 1950s scientific article (Strange 1959), compounded by a translation mistake from Farsi, and Snoswell et al. (2025) traced its persistence to AI training data.

The case illustrates how errors can infiltrate the scientific literature and bypass peer review. Several recent studies express concern about declining scientific integrity, pointing to signs such as the sharply increasing use of certain adjectives and adverbs typical of LLM-generated text and GPT-fabricated papers appearing in Google Scholar (e.g., Liang et al. 2024; Gray 2024; Haider et al. 2024; Leonard 2025).

Many view AI as a threat to scientific credibility (e.g., Conroy 2023). For these reasons, it is particularly important for analysts and researchers to understand the limitations of AI and use their expertise to ensure that AI supports rather than undermines the quality and credibility of reviews (Fabiano et al. 2024). The main challenges with assistants based on large language models include a lack of domain-specific expertise, hallucinations, and the “black box” problem, in which the algorithm, training data, or model is withheld for intellectual property reasons (Bolaños et al. 2024). Fully leveraging AI in SLRs therefore requires human-centric and trustworthy workflows, validated through open, standardized evaluation frameworks that assess performance, usability, and transparency together.

From a research ethics perspective, the use of AI applications should also be reported, as transparency is a cornerstone of science (Gray 2024). Most scientific publishers permit the use of AI in refining manuscripts but require that it be disclosed clearly and understandably (Gray 2024; Rantanen & Sahlgren 2024). The disclosure may cover use in information retrieval, ideation, language checking, or translation.

Our Recommendations for Using AI in SLRs

Our recommendations for companies considering AI and automation in systematic literature reviews (adapted from Fabiano et al. 2024):

Human oversight is essential. AI does not understand context as humans do and may misrepresent findings or overlook crucial information. Such errors can lead to faulty conclusions or poor decisions.

Tools that provide sources can prevent errors. Such tools enhance traceability and reduce misinformation risk, but do not eliminate the need for expert evaluation.

Users must understand the limitations of their tools. AI models differ, each with its own strengths and weaknesses. AI recommendations should be critically assessed, and users should thoroughly understand any software used for statistical analyses.

Reporting practices need improvement. AI tool usage is not always transparently reported, hindering the reproducibility vital to science. For language models, both the model and prompts should be openly documented to allow method replication.

Ethical considerations matter. Not all researchers have access to paid tools, which may exacerbate inequality. A balance must also be struck between openness and data privacy.

AI cannot be an author. Responsibility and error correction must lie with humans, as AI lacks autonomy and accountability.
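The reporting recommendation (openly documenting the model and the prompts) can be made concrete by logging every model run in a structured form that can be published as supplementary material. The sketch below is one possible record format, not a standard required by any publisher. The class name, the field names, the truncated SHA-256 fingerprint, and the example values (including the model name) are assumptions made for illustration.

```python
from __future__ import annotations

import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass
class AIUsageRecord:
    """One documented use of a language model in a review (illustrative fields)."""
    review_phase: str   # e.g. "title/abstract screening"
    model_name: str     # model identifier as given by the provider
    model_version: str  # version or snapshot label, if the provider publishes one
    prompt: str         # full prompt text, not a paraphrase
    run_date: str       # ISO 8601 date of the run
    parameters: dict[str, float] = field(default_factory=dict)  # e.g. temperature

    def prompt_fingerprint(self) -> str:
        """First 12 hex characters of the prompt's SHA-256 hash, for quick comparison."""
        return hashlib.sha256(self.prompt.encode("utf-8")).hexdigest()[:12]

    def to_json(self) -> str:
        """Serialize the record, including the fingerprint, for a supplementary file."""
        data = asdict(self)
        data["prompt_fingerprint"] = self.prompt_fingerprint()
        return json.dumps(data, indent=2, sort_keys=True)


record = AIUsageRecord(
    review_phase="title/abstract screening",
    model_name="example-model",   # hypothetical model name
    model_version="2025-01-01",   # hypothetical snapshot label
    prompt="Answer with exactly one word: INCLUDE, EXCLUDE or UNSURE.",
    run_date="2025-05-02",
    parameters={"temperature": 0.0},
)
print(record.to_json())
```

Publishing such records alongside a review lets others rerun the same prompts, although results from hosted models may still differ if the provider updates the model behind a given name.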

This text was written as part of the AI and Equity at Work Communities project, funded by Haaga-Helia University of Applied Sciences and the Finnish Work Environment Fund.

Contact us

Martti Asikainen

RDI Communications Specialist and Consultant

+358 44 920 7374

martti.asikainen@haaga-helia.fi

Janne Kauttonen

Senior Research

+358 29 447 1397

janne.kauttonen@haaga-helia.fi

References

Asikainen, M. (2024). Suomalaisfirma valjasti tekoälyn lääkinnällisten laitteiden tutkimusten tueksi – kehitti FAIR:in kanssa työkalun alle neljässä kuukaudessa [A Finnish company harnessed AI to support medical device research and developed a tool with FAIR in under four months]. Published on Finnish AI Region’s website, December 13, 2024. Accessed May 2, 2025.

Bolaños, F., Salatino, A., Osborne, F., & Motta, E. (2024). Artificial intelligence for literature reviews: opportunities and challenges. Artificial Intelligence Review, 57, article 259. Springer Nature, London.

Business Finland. (2023). Suomessa kehitetyt ratkaisut parantavat terveyssektorin kantokykyä maailmalla [Solutions developed in Finland improve the capacity of the health sector worldwide]. Published on Business Finland’s website, August 21, 2023. Helsinki. Accessed May 3, 2025.

Chaturvedi, A. (2025). AI’s Impact on Literature Review: A Comprehensive Review of the Best Tools. Published on Medium, January 23, 2025. Accessed May 3, 2025.

Conroy, G. (2023). Scientific sleuths spot dishonest ChatGPT use in papers. Published in Nature, September 8, 2023. Nature Portfolio, Springer Nature, London. Accessed May 4, 2025.

Fabiano, N., Gupta, A., Bhambra, N., Luu, B., Wong, S., Maaz, M., Fiedorowicz, J. G., Smith, A. L. & Solmi, M. (2024). How to optimize the systematic review process using AI tools. JCPP Advances, 4(2). Wiley-Blackwell, Hoboken.

Gray, A. (2024). ChatGPT “contamination”: estimating the prevalence of LLMs in the scholarly literature. arXiv preprint. Cornell University (arXiv).

Haider, J., Söderström, K. R., Ekström, B. & Rödl, M. (2024). GPT-fabricated scientific papers on Google Scholar: Key features, spread, and implications for preempting evidence manipulation. Harvard Kennedy School (HKS) Misinformation Review, 5(5), pp. 1–16. Harvard Kennedy School, Cambridge.

Kitchenham, B. & Charters, S. M. (2007). Guidelines for performing Systematic Literature Reviews in Software Engineering. Joint Report, Keele University and University of Durham.

Klatt, F. (2023). Business Systematic Literature Reviews. Die Bibliothek. Wirtschaft & Management workshop, June 30, 2023. Technische Universität Berlin. Accessed May 3, 2025.

Leonard, J. (2025). AI-enabled fakery has ‘infiltrated academic publishing’ say researchers. Published in Computing, February 17, 2025. The Channel Company. Accessed May 3, 2025.

Liang, W., Izzo, Z., Zhang, Y., Lepp, H., Cao, H., Zhao, X., Chen, L., Ye, H., Liu, S., Huang, Z., McFarland, D. A. & Zou, J. Y. (2024). Monitoring AI-Modified Content at Scale: A Case Study on the Impact of ChatGPT on AI Conference Peer Reviews. arXiv preprint. Cornell University (arXiv).

Rantanen, V. & Sahlgren, O. (2024). Tekoälyn hyödyntäminen tutkimuksessa vastuullisuuteen ja kestävyyteen liittyvät näkökulmat huomioiden [Using AI in research with regard to responsibility and sustainability]. Presentation at Tampere University, Faculty of Management and Business, September 24, 2024. Accessed May 3, 2025.

Snoswell, A. J., Witzenberger, K. & El Masri, R. (2025). A weird phrase is plaguing scientific papers – and we traced it back to a glitch in AI training data. Published in The Conversation, April 15, 2025. Melbourne. Accessed May 14, 2025.

Strange, R. E. (1959). Cell Wall Lysis and the Release of Peptides in Bacillus Species. Bacteriological Reviews, 23(1). American Society for Microbiology, Washington.

Wagner, G., Lukyanenko, R. & Paré, G. (2022). Artificial intelligence and the conduct of literature reviews. Journal of Information Technology, 37(2), pp. 209–226. Sage Journals, Thousand Oaks.